Ollama and Open-WebUI

This page explains how to run Ollama and Open-WebUI.

Ollama will run on ds01 while Open-WebUI will run on your own machine. We will use SSH port-forwarding to connect your local instance of Open-WebUI to Ollama.

Run Ollama in Slurm

This step is done on ds01

Ollama comes preinstalled on ds01. To avoid interfering with other users and to enable Ollama to use GPUs, Ollama must be launched through the Slurm scheduler. A sample script is available in our GitHub repository.

We suggest cloning the entire repository, which contains the Slurm script.

You can then find the Slurm script at ~/src/ds01-examples/slurm/ollama_serve.job.

It will look like this:

#!/bin/bash
#SBATCH --output /home/volkerh/logs/slurm/ollama_serve_%j.out
#SBATCH --job-name ollama/serve
#SBATCH --partition sintef
#SBATCH --ntasks 1
#SBATCH --mem=2GB
#SBATCH --cpus-per-task=1
#SBATCH --gres gpu:a30:1
#SBATCH --time 00-01:00:00

echo ""
echo "***** LAUNCHING *****"
date '+%F %H:%M:%S'
echo ""

export OLLAMA_HOST=127.0.0.1:31337

# DATE=$(date +%F_%H%M)
# srun ollama serve > "/home/volkerh/logs/ollama/ollama_serve_${SLURM_JOB_ID}_${DATE}.log" 2>&1
srun ollama serve 2>&1

echo ""
echo "***** DONE *****"
date '+%F %H:%M:%S'
echo ""

You will need to make a few adjustments:

- Change the paths to the correct username (change /home/volkerh to /home/YOUR_USER).
- Change the port Ollama will expose (change export OLLAMA_HOST=127.0.0.1:31337 to export OLLAMA_HOST=127.0.0.1:SOME_NUMBER).
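The two required edits can also be applied mechanically. The following is a minimal sketch, assuming sed is available; the function name adjust_job_script and the output file name in the usage comment are hypothetical and not part of the examples repository.

```shell
# Hypothetical helper: write an adjusted copy of the job script.
# The body runs in a subshell, so its variables do not leak into your shell.
adjust_job_script() (
    src="${1:?usage: adjust_job_script SRC DST USER PORT}"
    dst="${2:?usage: adjust_job_script SRC DST USER PORT}"
    user="${3:?usage: adjust_job_script SRC DST USER PORT}"
    port="${4:?usage: adjust_job_script SRC DST USER PORT}"

    # 1) Point every /home/volkerh path at the given user's home directory.
    # 2) Replace the whole OLLAMA_HOST line so that it uses the chosen port.
    sed -e "s|/home/volkerh|/home/${user}|g" \
        -e "s|^export OLLAMA_HOST=.*|export OLLAMA_HOST=127.0.0.1:${port}|" \
        "$src" > "$dst"
)

# Example (the port 40123 is an arbitrary illustration):
# adjust_job_script ~/src/ds01-examples/slurm/ollama_serve.job ~/my_ollama_serve.job "$USER" 40123
```

Writing to a separate file keeps the cloned repository unchanged, so a later update of the repository does not conflict with your local edits.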

Optionally, you can make a few more adjustments:

- Allow for more CPU cores (e.g., change --cpus-per-task=1 to --cpus-per-task=2).
- Allow for more RAM (e.g., change --mem=2GB to --mem=32GB). This should be at least equal to the size of the model you wish to use.
- Allow for a longer runtime (e.g., change --time 00-01:00:00 to --time 00-06:00:00 to allow six hours of runtime).

Runtime

The maximum permitted runtime is 24 hours.

Memory

Your choice of LLM determines how much memory you need. Request at least enough memory to load the entire model. For models running on our NVIDIA A30 GPUs, requesting more than 24 GB does not make sense, since each A30 has 24 GB of memory. If you want to do inference on the CPU, you can load larger models.

Finally, make sure that the directory /home/YOUR_USER/logs/slurm/ exists.

Once you have adjusted the Slurm script, you can submit the job as follows:
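The submission command is not reproduced above. With a standard Slurm installation, sbatch is the tool for submitting a job script, so a minimal sketch could look as follows. The function name submit_ollama_job is hypothetical, and the default paths assume that the repository was cloned to ~/src/ds01-examples and that your home directory is /home/YOUR_USER.

```shell
# Hypothetical helper: make sure the log directory exists, submit the job,
# and print the job ID. The body runs in a subshell to keep variables local.
submit_ollama_job() (
    script="${1:-$HOME/src/ds01-examples/slurm/ollama_serve.job}"
    log_dir="${2:-$HOME/logs/slurm}"

    # Slurm does not create the directory named in "#SBATCH --output".
    mkdir -p "$log_dir" || exit 1

    # --parsable makes sbatch print only "jobid" or "jobid;cluster".
    job_id="$(sbatch --parsable "$script")" || exit 1

    # Strip an optional ";cluster" suffix.
    echo "${job_id%%;*}"
)

# Example:
# job_id="$(submit_ollama_job)" && echo "Submitted job ${job_id}"
```

The printed job ID is the number that replaces %j in the log file name.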

You can check that the job is running as follows:

$ sq
JOBID  USER     PARTITION  NAME          EXEC_HOST  ST  REASON  TIME   TIME_LEFT  CPUS  MIN_MEMORY  TRES_PER_NOD
152    volkerh  sintef     ollama/serve  ds01       R   None    23:28  5:36:32    2     32G         gres:gpu:a30

You can also monitor the log to see what's going on:

$ tail -f ~/logs/slurm/ollama_serve_152.out
time=2025-01-28T11:21:47.306+01:00 level=INFO source=routes.go:1267 msg="Dynamic LLM libraries" runners="[cpu cpu_avx cpu_avx2 cuda_v11_avx cuda_v12_avx rocm_avx]"
time=2025-01-28T11:21:47.307+01:00 level=INFO source=gpu.go:226 msg="looking for compatible GPUs"
time=2025-01-28T11:21:47.827+01:00 level=INFO source=types.go:131 msg="inference compute" id=GPU-dfa16a8e-aad3-29a9-20b7-92bfab2d5836 library=cuda variant=v12 compute=8.0 driver=12.7 name="NVIDIA A30" total="23.6 GiB" available="23.4 GiB"
[...]
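To check from a script whether Ollama picked up the GPU, one option is to search the log for the "inference compute" line shown in the excerpt. This is a minimal sketch; the function name ollama_found_gpu is hypothetical, and the match relies on the log format of the excerpt, which may differ between Ollama versions.

```shell
# Hypothetical helper: succeed if the log contains an "inference compute"
# line that reports the CUDA library, as in the excerpt above.
ollama_found_gpu() (
    log_file="${1:?usage: ollama_found_gpu LOG_FILE}"
    grep 'msg="inference compute"' "$log_file" | grep -q 'library=cuda'
)

# Example (152 is the job ID from the excerpt):
# ollama_found_gpu ~/logs/slurm/ollama_serve_152.out && echo "Ollama is using the GPU"
```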

Install Open-WebUI

This step is done on your local machine

Assuming you have installed Miniforge or similar to get a working Conda environment, you can install Open-WebUI as follows:
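The installation commands are not reproduced here. The following is a minimal sketch, assuming the environment is called open-webui (the name used when activating it later on this page) and that Open-WebUI is installed from PyPI with pip. The Python version 3.11 is an assumption based on the official Open-WebUI documentation at the time of writing, so check there for the current recommendation. The function name install_open_webui is hypothetical.

```shell
# Hypothetical helper: create a Conda environment and install Open-WebUI
# into it. The body runs in a subshell to keep variables local.
install_open_webui() (
    env_name="${1:-open-webui}"

    # Assumption: Python 3.11 is the version recommended upstream.
    conda create --yes --name "$env_name" python=3.11 || exit 1

    # "conda run" executes pip inside the environment without activating it.
    conda run --name "$env_name" pip install open-webui
)

# Example:
# install_open_webui
```

The same two steps can also be run interactively: create the environment, activate it, and run pip install open-webui.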

See also the official documentation.

Establish an SSH Tunnel

This step is done on your local machine

In a terminal, run

where you need to change 31337 to the port number you have used in the Slurm script above.
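The tunnel command is not reproduced above. The following is a minimal sketch using OpenSSH local port forwarding. It assumes that ds01 is reachable under that host name from your machine (you may need the fully qualified name) and that the Slurm job runs on ds01 itself; open_ollama_tunnel is a hypothetical helper name.

```shell
# Hypothetical helper: forward a local port to the same port on ds01.
# The body runs in a subshell to keep variables local.
open_ollama_tunnel() (
    port="${1:?usage: open_ollama_tunnel PORT USER [HOST]}"
    user="${2:?usage: open_ollama_tunnel PORT USER [HOST]}"
    host="${3:-ds01}"

    # -N: do not run a remote command, only forward ports.
    # -L: listen on localhost:PORT and forward to 127.0.0.1:PORT on the
    #     remote side, which is where OLLAMA_HOST makes Ollama listen.
    ssh -N -L "${port}:127.0.0.1:${port}" "${user}@${host}"
)

# Example:
# open_ollama_tunnel 31337 YOUR_USER
```

The command stays in the foreground for as long as the tunnel is open; stop it with Ctrl+C when you are done.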

If you now navigate to http://localhost:31337 in your browser, you should see a short message confirming that Ollama is running.

Launch and Access Open-WebUI

This step is done on your local machine

Finally, we launch Open-WebUI, making sure it connects to the port we have forwarded from localhost to ds01. We also disable the creation of user accounts. We start Open-WebUI as follows:

$ conda activate open-webui
$ OLLAMA_BASE_URL=http://localhost:31337 WEBUI_AUTH=false open-webui serve

Again, make sure you change the port 31337 to the one you selected.

This produces a fair amount of log output. Eventually, you will see the banner and a message indicating that the frontend is now accessible:

[...]
  ___                    __        __   _     _   _ ___
 / _ \ _ __   ___ _ __   \ \      / /__| |__ | | | |_ _|
| | | | '_ \ / _ \ '_ \   \ \ /\ / / _ \ '_ \| | | || |
| |_| | |_) |  __/ | | |   \ V  V /  __/ |_) | |_| || |
 \___/| .__/ \___|_| |_|    \_/\_/ \___|_.__/ \___/|___|
      |_|

v0.5.7 - building the best open-source AI user interface.
https://github.com/open-webui/open-webui
[...]
INFO: Started server process [11408]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://0.0.0.0:8080 (Press CTRL+C to quit)
[...]

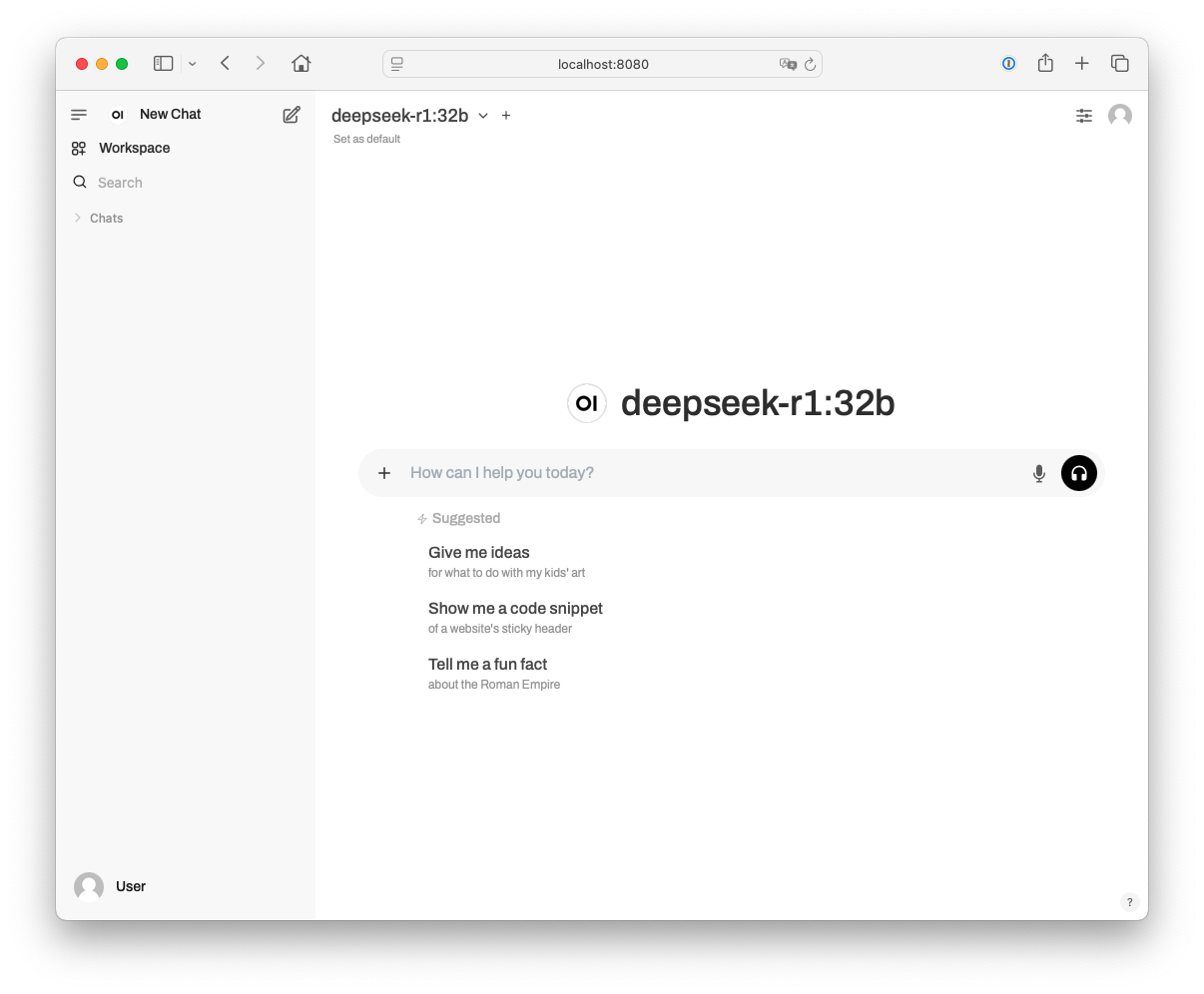

If you navigate to http://localhost:8080 in your browser, you should see something like the screenshot above.